In 2025, AI toys have become one of the hottest segments of the global consumer technology market. The rise of DeepSeek has significantly lowered the cost of, and barrier to, deploying large models, sparking enthusiasm across the industry and the capital markets for edge-side AI and further fueling the AI toy boom.

AI Toys with Large Model Access

On the demand side, AI toys are redefining how children are accompanied and educated. Beyond the basic need to "play", they offer children emotional value through intelligent, human-like ("anthropomorphic") interaction. Market data show that the global AI toy market reached USD 18.1 billion in 2024 and is growing at a compound annual growth rate (CAGR) of 16%; it is expected to exceed USD 60 billion by 2033. A growing number of manufacturers are entering the AI toy space. The challenge is standing out in a fiercely competitive market: building a differentiated product with comprehensive features and an excellent user experience has become the key to success.
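As a rough consistency check, assuming the 16% CAGR compounds annually over the nine years from 2024 to 2033, the stated 2024 figure implies:

$$
V_{2033} = V_{2024}\,(1+r)^{9} = 18.1 \times 1.16^{9} \approx 18.1 \times 3.803 \approx 68.8 \ \text{(billion USD)}
$$

which is consistent with the projection of more than USD 60 billion by 2033.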

Offline-Online Speech Large Model Solution Demonstration for AI Toys

Chipintelli, with ten years of experience in the intelligent speech industry, has launched an offline-online speech large model solution for AI toys. Compared with existing industry solutions, it offers five major advantages:

01 Natural Interaction and Privacy Protection with Voice Wake-up

At present, most AI toys either rely on touch buttons to start a conversation, which makes interaction rigid and degrades the user experience, or continuously listen and upload audio to the cloud, which risks leaking user privacy and makes it hard to win parents' trust.

This solution integrates an edge-side, DNN-based (Deep Neural Network) VAD (Voice Activity Detection) algorithm, so the toy can be woken directly by voice without any button presses. The toy monitors voice input on the device in real time and uploads audio to the cloud large model only when valid speech is detected, balancing natural interaction with privacy protection.
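The gating idea above, where only detected speech ever leaves the device, can be sketched in a few lines. Chipintelli's DNN-based VAD is not described in detail, so this minimal Python sketch swaps in a simple frame-energy threshold as a stand-in; the frame size, threshold, hangover length, and the names `frame_rms` and `speech_segments` are all hypothetical illustration choices, not product parameters.

```python
import math
from typing import Iterable, Iterator, List, Sequence

# All constants are hypothetical illustration values, not Chipintelli parameters.
FRAME_LEN = 160          # 10 ms frames at an assumed 16 kHz sample rate
ENERGY_THRESHOLD = 0.02  # RMS level treated as "speech"; a DNN VAD would output a speech probability instead
HANGOVER_FRAMES = 5      # quiet frames tolerated inside a segment before it is closed


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square level of one frame of samples in [-1.0, 1.0]."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def speech_segments(frames: Iterable[Sequence[float]]) -> Iterator[List[Sequence[float]]]:
    """Yield runs of speech frames; frames outside any segment are simply discarded on-device."""
    segment: List[Sequence[float]] = []
    silence_run = 0
    for frame in frames:
        if frame_rms(frame) >= ENERGY_THRESHOLD:
            segment.append(frame)
            silence_run = 0
        elif segment:
            # Keep short pauses inside an utterance, but close the segment after a long one.
            segment.append(frame)
            silence_run += 1
            if silence_run > HANGOVER_FRAMES:
                yield segment[:-silence_run]  # drop the trailing quiet frames
                segment, silence_run = [], 0
    if segment:
        yield segment[:len(segment) - silence_run]


if __name__ == "__main__":
    silence = [0.0] * FRAME_LEN
    tone = [0.1 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(FRAME_LEN)]
    stream = [silence] * 10 + [tone] * 20 + [silence] * 10 + [tone] * 15 + [silence] * 3
    for seg in speech_segments(stream):
        print(f"would upload {len(seg)} frames")  # stand-in for a hypothetical cloud upload call
```

The hangover keeps brief pauses inside one utterance so a single sentence is not split into several uploads; a learned VAD replaces the energy test with a classifier that is far more robust to loud non-speech sounds.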

02 Strong Real-time Performance and Smooth Interaction

Latency is a key factor in user experience. When speech interaction latency exceeds 1 second, users perceive an obvious lag, which makes the interaction feel much less smooth and leaves users feeling anxious.

This solution moves speech data processing onto the chip, using the edge-side DNN-based VAD algorithm and a deep noise reduction algorithm. As a result, only processed, high-quality speech data is uploaded to the cloud. This avoids the response latency caused by redundant data processing and heavy computing tasks in the cloud and meets the real-time interaction needs between children and their devices.

03 High Recognition Accuracy and Adaptation to Complex Noise Environments

Most current AI toys lack speech noise reduction, so recognition accuracy drops in noisy environments and children have to repeat their instructions, which hurts the user experience. This solution uses DNN-based deep noise reduction with strong adaptability and generalization, maintaining effective noise suppression across different noise environments. It delivers cleaner speech to the cloud large model and thereby greatly improves the model's speech recognition accuracy.
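Chipintelli does not describe how its DNN noise reduction works, so the following is only a minimal sketch of a classical baseline, not the product's algorithm: a Wiener-style gain per frequency bin, G(k) = max(1 - N(k)/Y(k), floor), computed from the noisy power Y(k) and a noise estimate N(k). Many neural suppressors instead learn a per-frequency gain (mask) of this kind directly. The floor value and the function names are hypothetical.

```python
from typing import List, Sequence

GAIN_FLOOR = 0.1  # hypothetical minimum gain (about -20 dB) so noise is attenuated, not gated to zero


def suppression_gains(noisy_power: Sequence[float], noise_power: Sequence[float],
                      floor: float = GAIN_FLOOR) -> List[float]:
    """Wiener-style gain per frequency bin: G(k) = max(1 - N(k)/Y(k), floor).

    noisy_power[k] is |Y(k)|^2 of the current frame; noise_power[k] is the estimated noise power.
    """
    gains = []
    for y, n in zip(noisy_power, noise_power):
        gain = 1.0 - n / y if y > 0.0 else 0.0  # empty bins get the floor below
        gains.append(max(gain, floor))
    return gains


def apply_gains(spectrum: Sequence[complex], gains: Sequence[float]) -> List[complex]:
    """Scale each complex STFT bin by its gain; the phase is left unchanged."""
    return [g * s for g, s in zip(gains, spectrum)]
```

Bins where speech dominates (Y much larger than N) pass almost unchanged, while bins near the noise level are pulled down to the floor. In a full pipeline these gains would be applied to every STFT frame before the inverse transform; how the noise estimate is tracked (for example, during frames a VAD marks as non-speech) is outside this sketch.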

04 Real-time Interruption for Improved Interaction Efficiency

Most current AI toys can respond to a new instruction only after they finish speaking the current answer, which makes interaction inefficient. This solution combines echo cancellation with the VAD algorithm to effectively suppress the echo of the toy's own playback and support real-time interruption (barge-in), so children can speak a new instruction without a long wait, keeping the experience smooth and responsive.
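Real-time interruption rests on two steps: remove the toy's own playback from the microphone signal, then run voice detection on what remains. Chipintelli's echo canceller is not described, so this minimal sketch uses a textbook NLMS (normalized least mean squares) adaptive filter plus a frame-energy detector standing in for the DNN VAD. The tap count, step size, thresholds, and all function names are hypothetical.

```python
import math
import random
from typing import List, Sequence

TAPS = 16    # hypothetical echo-path length in samples (real rooms need far longer filters)
MU = 0.5     # NLMS step size; must lie in (0, 2) for stability
EPS = 1e-6   # regularizer so silent playback does not divide by zero


def nlms_echo_cancel(far_end: Sequence[float], mic: Sequence[float],
                     taps: int = TAPS, mu: float = MU) -> List[float]:
    """Return mic minus an adaptive estimate of the echo of far_end (the toy's own playback)."""
    w = [0.0] * taps        # estimated echo-path impulse response
    history = [0.0] * taps  # last `taps` far-end samples, newest first
    residual: List[float] = []
    for x, d in zip(far_end, mic):
        history = [x] + history[:-1]
        echo_hat = sum(wi * hi for wi, hi in zip(w, history))
        e = d - echo_hat    # what is left: near-end speech plus any unmodelled echo
        step = mu * e / (sum(h * h for h in history) + EPS)
        w = [wi + step * hi for wi, hi in zip(w, history)]
        residual.append(e)
    return residual


def detect_barge_in(residual: Sequence[float], frame_len: int = 160,
                    threshold: float = 0.02, min_frames: int = 3) -> int:
    """Sample index where `min_frames` consecutive loud residual frames end, or -1 if none."""
    run = 0
    for start in range(0, len(residual) - frame_len + 1, frame_len):
        frame = residual[start:start + frame_len]
        rms = math.sqrt(sum(v * v for v in frame) / frame_len)
        run = run + 1 if rms >= threshold else 0
        if run >= min_frames:
            return start + frame_len  # here playback would stop and recording would start
    return -1


def delay(signal: Sequence[float], k: int) -> List[float]:
    """Demo helper: shift a signal right by k > 0 samples, padding with zeros."""
    return [0.0] * k + list(signal[:-k])


if __name__ == "__main__":
    random.seed(0)
    n = 16_000  # 1 s at an assumed 16 kHz
    playback = [random.gauss(0.0, 0.3) for _ in range(n)]  # stand-in for the toy's speech output
    echo = [0.6 * a + 0.3 * b for a, b in zip(delay(playback, 5), delay(playback, 12))]
    child = [0.0 if i < 8_000 else 0.1 * math.sin(2 * math.pi * 300 * i / 16_000) for i in range(n)]
    mic = [e + c for e, c in zip(echo, child)]
    residual = nlms_echo_cancel(playback, mic)
    warmup = 1_600  # skip the first 0.1 s while the filter converges
    hit = detect_barge_in(residual[warmup:])
    print("barge-in at sample", hit + warmup if hit >= 0 else None)
```

Without the echo canceller, the toy's own voice would keep the detector permanently triggered; after cancellation, the residual stays near zero until the child starts speaking. Production cancellers also need double-talk handling and residual echo suppression, which this sketch omits.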

05 Directional Interaction for an Immersive Human-Machine Interaction Experience

Typical AI toys cannot perceive the direction of a sound source, so they cannot naturally turn their attention to the user, which makes the interaction monotonous. This solution uses a DNN-based sound source localization algorithm to enable directional interaction in multiple scenarios. The device can sense the direction of a child's voice and actively "look" at or move toward the child, mimicking real-life interaction and making it more natural and fun.
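The underlying geometry can be illustrated with the classical two-microphone method: estimate the time difference of arrival (TDOA) from the cross-correlation peak, then convert it to an angle with sin(theta) = c * tau / d. This is a plain cross-correlation stand-in, not Chipintelli's DNN localizer, and the 6 cm spacing, 16 kHz sample rate, and function names are hypothetical.

```python
import math
import random
from typing import Sequence

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C
MIC_SPACING = 0.06      # hypothetical distance between the two microphones, in metres
SAMPLE_RATE = 16_000    # hypothetical sampling rate, in Hz


def estimate_lag(left: Sequence[float], right: Sequence[float], max_lag: int) -> int:
    """Lag (in samples) maximizing sum(left[i] * right[i + lag]).

    A positive result means the right channel is a delayed copy, i.e. the sound reached the left mic first.
    """
    n = min(len(left), len(right))
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Only sum over indices where both left[i] and right[i + lag] exist.
        score = sum(left[i] * right[i + lag] for i in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_of_arrival(left: Sequence[float], right: Sequence[float],
                         spacing: float = MIC_SPACING, fs: int = SAMPLE_RATE,
                         c: float = SPEED_OF_SOUND) -> float:
    """Angle in degrees from broadside (0 = straight ahead, +90 = along the axis toward the left mic)."""
    max_lag = math.ceil(spacing / c * fs)  # largest physically possible delay
    lag = estimate_lag(left, right, max_lag)
    sin_theta = max(-1.0, min(1.0, c * (lag / fs) / spacing))  # clamp against rounding past +/-1
    return math.degrees(math.asin(sin_theta))


if __name__ == "__main__":
    random.seed(0)
    voice = [random.gauss(0.0, 1.0) for _ in range(800)]  # noise burst standing in for a child's voice
    right = [0.0, 0.0] + voice[:-2]                        # right mic hears it 2 samples later
    print(f"{direction_of_arrival(voice, right):.1f} degrees")
```

With whole-sample lags at 16 kHz and 6 cm spacing the angle resolution is coarse (one sample already corresponds to about 21° near broadside), so practical systems interpolate the correlation peak, use weightings such as GCC-PHAT, or learn the mapping. A two-microphone array also cannot tell front from back on its own.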

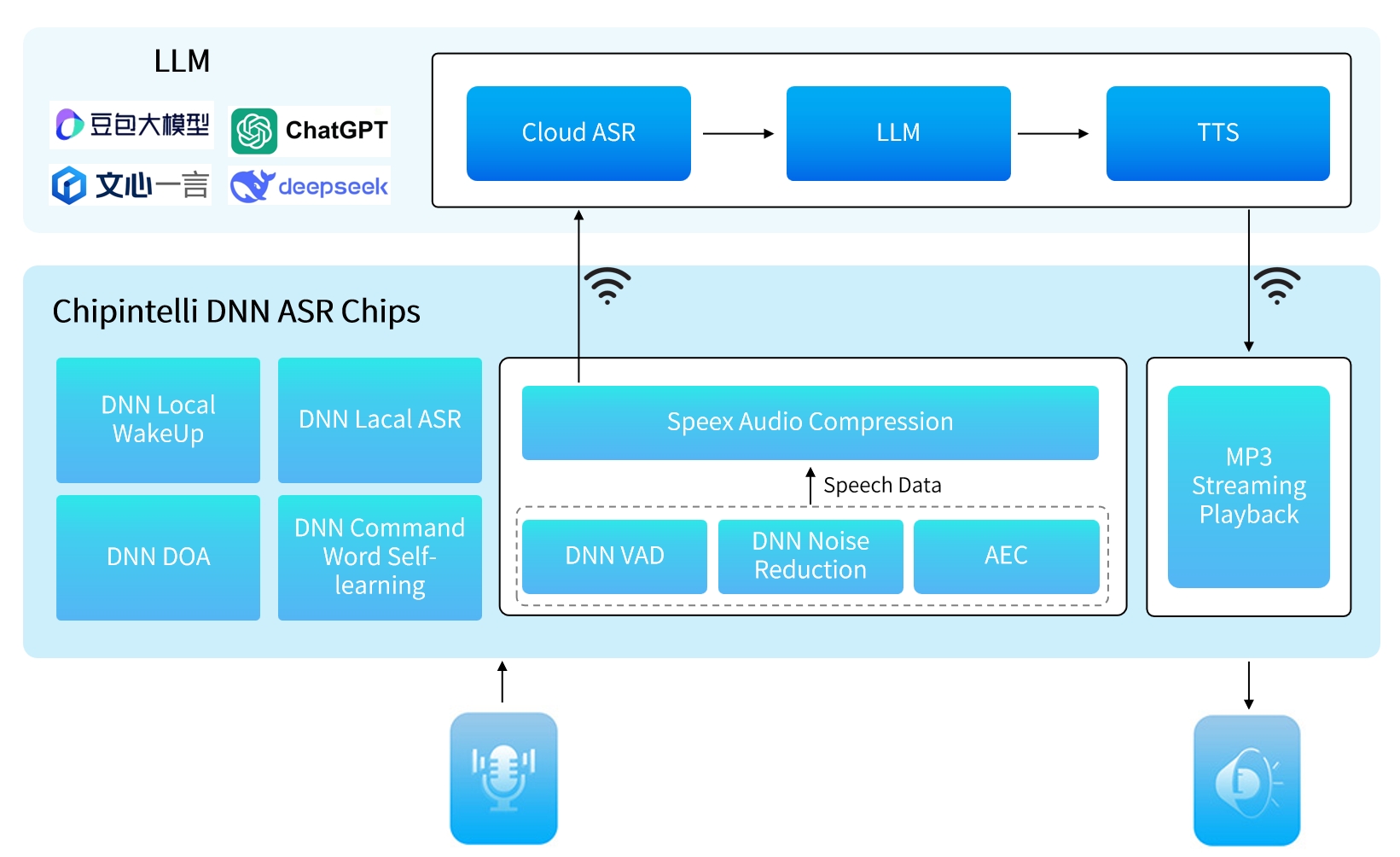

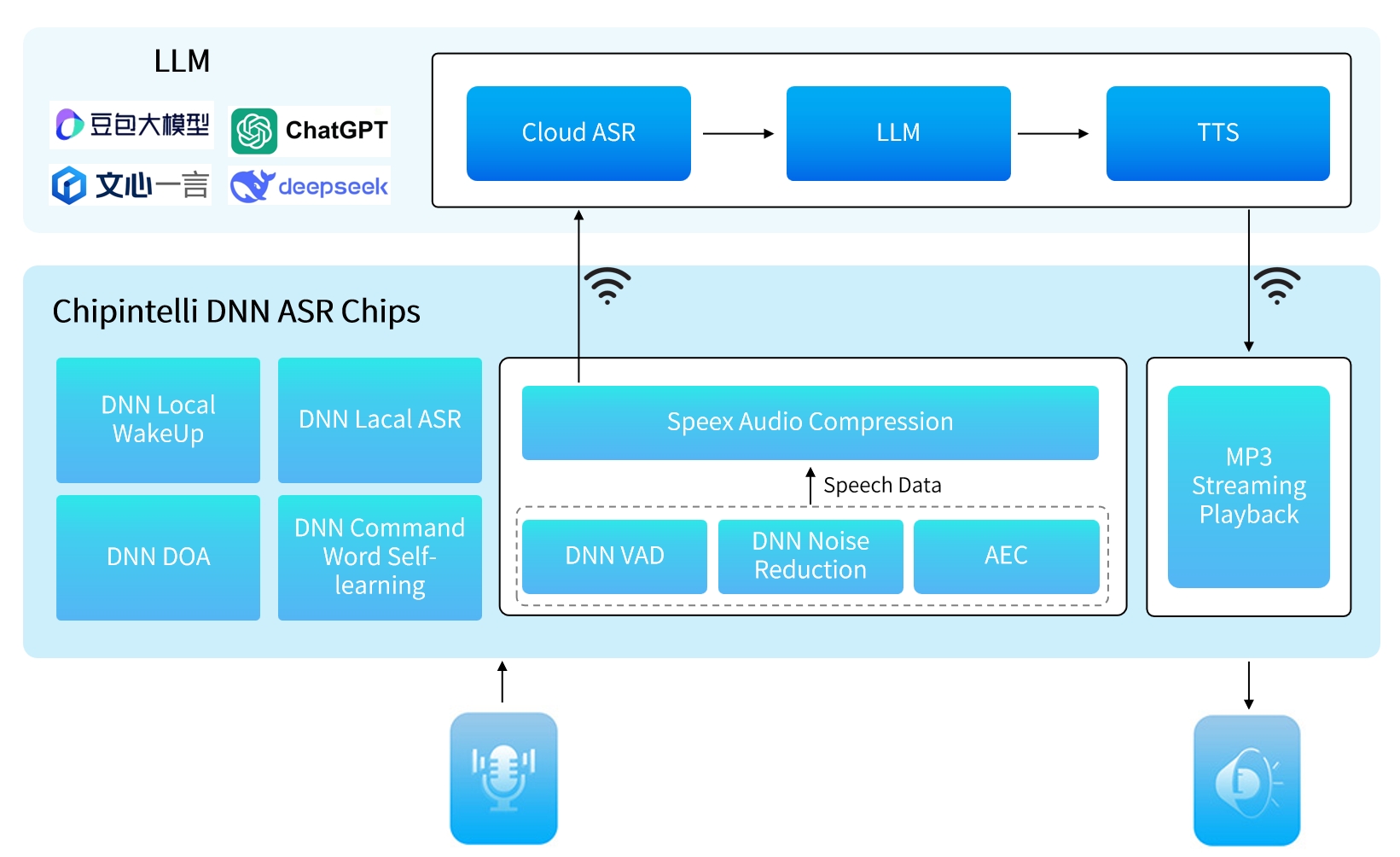

Functional Block Diagram of Chipintelli's AI Toy Offline-Online Speech Large Model Solution

Chipintelli has launched versions of the AI toy offline-online speech large model solution for its CI1302, CI1303, and CI1306 offline AI speech chips and its CI2305 and CI2306 AI speech Wi-Fi combo chips.

● CI1302, CI1303

On these chips, Chipintelli provides DNN-based local voice wake-up and speech recognition, deep speech noise reduction, endpoint detection, command-word self-learning, and echo cancellation, along with Speex and Opus data compression and MP3 streaming playback. Chipintelli also provides an SDK for these front-end functions, and customers can carry out cloud development on a Wi-Fi module of their own choice.

This solution uses a single microphone, places relatively few demands on the product's mechanical design, and suits a wide range of AI toys.

● CI1306

On this chip, Chipintelli provides DNN-based local voice wake-up and speech recognition, deep speech noise reduction, endpoint detection, sound source localization, and echo cancellation, along with Speex and Opus data compression and MP3 streaming playback. Chipintelli also provides an SDK for these front-end functions, and customers can carry out cloud development on a Wi-Fi module of their own choice.

This solution uses dual microphones to enable sound source localization and suits AI toys, desktop pets, and other products that require directional interaction.

● CI2305, CI2306

On these chips, Chipintelli provides both speech and Wi-Fi functions. The speech functions include DNN-based local voice wake-up and speech recognition, deep speech noise reduction, endpoint detection, sound source localization, and echo cancellation, along with Speex and Opus data compression and MP3 streaming playback. The Wi-Fi functions include forwarding of recorded and playback audio data, BLE network provisioning, part of the product's functional logic, and support for the TCP, MQTT, and UDP network protocols. Chipintelli provides an SDK for connecting the chip to cloud platform service providers, and customers can develop edge-side functions on top of this SDK.

Because this solution integrates Wi-Fi, it can lower the cost of the overall solution. It is available in single-microphone and dual-microphone versions, and the dual-microphone version supports directional interaction.
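The Speex and Opus compression listed for all three chip families matters for latency as well as bandwidth. The back-of-the-envelope sketch below compares raw 16 kHz, 16-bit mono PCM with the same audio encoded at 16 kbit/s. The sample format, the 16 kbit/s codec bitrate, the 3-second utterance, and the 1 Mbit/s effective uplink are all illustrative assumptions, not Chipintelli specifications (Opus itself supports bitrates from 6 to 510 kbit/s).

```python
def pcm_bitrate_bps(sample_rate_hz: int, bits_per_sample: int, channels: int = 1) -> int:
    """Bit rate of uncompressed PCM audio."""
    return sample_rate_hz * bits_per_sample * channels


def payload_bytes(bitrate_bps: float, duration_s: float) -> float:
    """Bytes needed to carry duration_s seconds of audio at bitrate_bps."""
    return bitrate_bps * duration_s / 8


def upload_time_s(n_bytes: float, uplink_bps: float) -> float:
    """Transmission time only; ignores protocol overhead, retransmissions, and round trips."""
    return n_bytes * 8 / uplink_bps


if __name__ == "__main__":
    UTTERANCE_S = 3.0       # hypothetical utterance length
    CODEC_BPS = 16_000      # hypothetical codec bitrate (e.g. an Opus setting)
    UPLINK_BPS = 1_000_000  # hypothetical effective Wi-Fi uplink throughput

    raw = payload_bytes(pcm_bitrate_bps(16_000, 16), UTTERANCE_S)
    coded = payload_bytes(CODEC_BPS, UTTERANCE_S)
    print(f"raw PCM: {raw:.0f} bytes, {upload_time_s(raw, UPLINK_BPS):.3f} s")
    print(f"coded:   {coded:.0f} bytes, {upload_time_s(coded, UPLINK_BPS):.3f} s")
```

Under these assumptions, raw PCM takes about 0.77 s just to transmit, most of the 1-second budget discussed earlier, while the coded stream takes about 0.05 s. Real uplink throughput varies widely, so the numbers only show the order of magnitude.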

Built on Chipintelli's neural network speech chips, the AI toy offline-online speech large model solution supports OTA (Over-The-Air) upgrades. It can also be applied to smart home appliances, AI education, elderly care, robots, and other products, addressing key industry pain points and giving manufacturers a highly competitive, differentiated solution.